As a quick recap, in Part 1:

- We built a simple gRPC service for managing topics and messages in a chat service (like a very simple version of Zulip, Slack, or Teams).

- gRPC provided a very easy way to represent the services and operations of this app.

- We were able to serve (a very rudimentary implementation) from localhost on an arbitrary port (9000 by default) on a custom TCP protocol.

- We were able to call the methods on these services both via a CLI utility (grpc_cli) as well as through generated clients (via tests).

The advantage of this approach is that any app/site/service can access this running server via a client (we could also generate JS or Swift or Java clients to make these calls in the respective environments).

At a high level, the downsides to this approach are:

- Network access: Usually a network request (from an app or a browser client to this service) has to traverse several networks over the internet. Most networks are secured by firewalls that only permit access to specific ports and protocols (80:http, 443:https), and having this custom port (and protocol) whitelisted on every firewall along the way may not be tractable.

- Discomfort with non-standard tools: Familiarity and comfort with gRPC are still nascent outside the service-building community. For most service consumers, few things are easier and more accessible than HTTP-based tools (cURL, HTTPie, Postman, etc). Similarly, other enterprises/organizations are used to APIs exposed as RESTful endpoints, so having to build/integrate non-HTTP clients imposes a learning curve.

Use a Familiar Cover: gRPC-Gateway

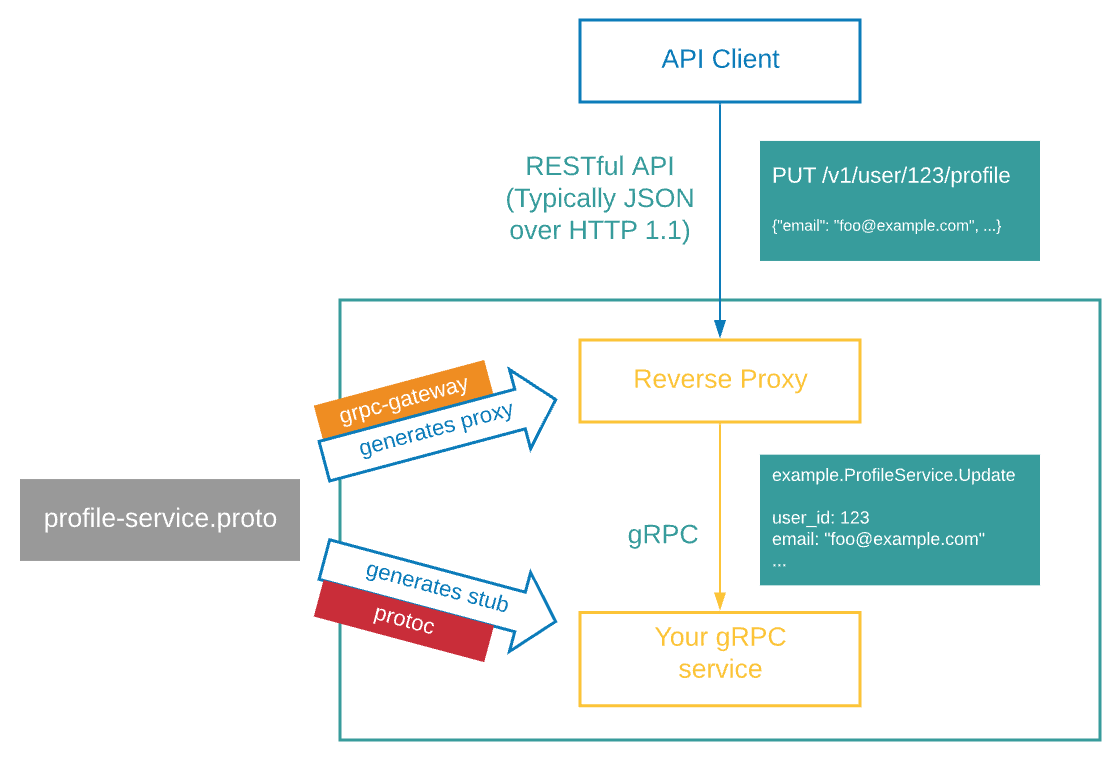

We can have the best of both worlds by enacting a proxy in front of our service that translates gRPC to/from the familiar REST/HTTP to/from the outside world. Given the amazing ecosystem of plugins in gRPC, just such a plugin exists: the gRPC-Gateway. The repo itself contains a very in-depth set of examples and tutorials on how to integrate it into a service. In this guide, we shall apply it to our canonical chat service in small increments.

The very high-level image above (courtesy of gRPC-Gateway) shows the final wrapper architecture around our service.

This approach has several benefits:

- Interoperability: Clients that need and only support HTTP(s) can now access our service with a familiar facade.

- Network support: Most corporate firewalls and networks rarely allow non-HTTP ports. With the gRPC-Gateway, this limitation can be eased as the services are now exposed via an HTTP proxy without any loss in translation.

- Client-side support: Today, several client-side libraries already support and enable REST, HTTP, and WebSocket communication with servers. Using the gRPC-Gateway, these existing tools (e.g., cURL, HTTPie, Postman) can be used as is. Since no custom protocol is exposed beyond the gRPC-Gateway, complexity (for implementing clients for custom protocols) is eliminated (e.g., no need to implement a gRPC generator for Kotlin or Swift to support Android or iOS).

- Scalability: Standard HTTP load balancing techniques can be applied by placing a load balancer in front of the gRPC-Gateway to distribute requests across multiple gRPC service hosts. Building a protocol/service-specific load balancer is not an easy or rewarding task.

Overview

You may have already guessed: protoc plugins again come to the rescue. In our service's Makefile (see Part 1), we generated messages and service stubs for Go using the protoc-gen-go plugin:

protoc --go_out=$OUT_DIR --go_opt=paths=source_relative \
       --go-grpc_out=$OUT_DIR --go-grpc_opt=paths=source_relative \
       --proto_path=$PROTO_DIR \
       $PROTO_DIR/onehub/v1/*.proto

A Brief Introduction to Plugins

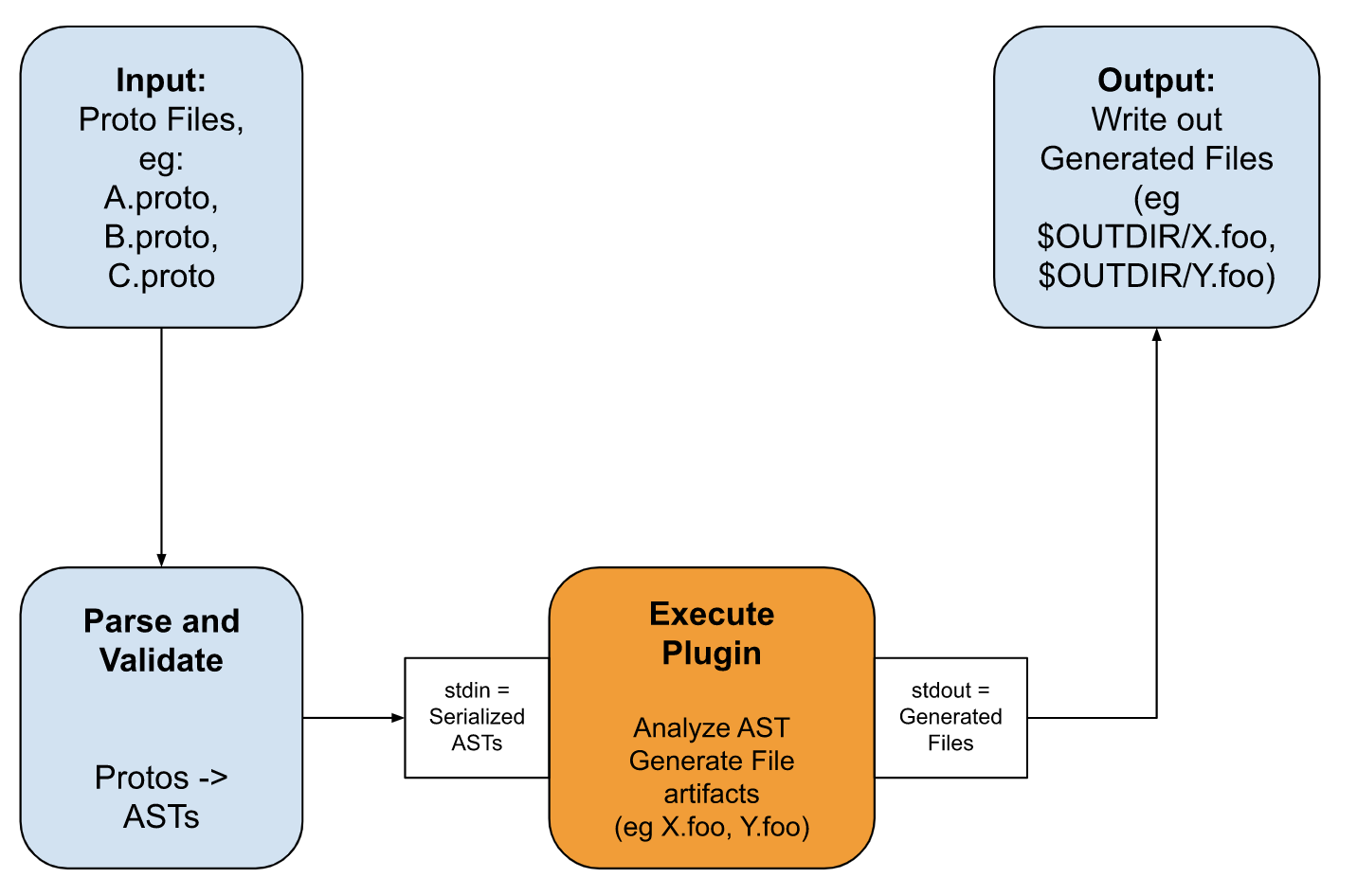

The magic of protoc is that it does not perform any generation on its own, but orchestrates plugins by passing the parsed Abstract Syntax Tree (AST) across plugins. This is illustrated above and works as follows:

- Step 0: Input files (in the above case, onehub/v1/*.proto) are passed to protoc.

- Step 1: The protoc tool first parses and validates all proto files.

- Step 2: protoc then invokes each plugin in its list of command line arguments in turn by passing a serialized version of all the proto files it has parsed into an AST.

- Step 3: Each proto plugin (in this case, go and go-grpc) reads this serialized AST via its stdin. The plugin processes/analyzes these AST representations and generates file artifacts.

  - Note that there does not need to be a 1:1 correspondence between input files (e.g., A.proto, B.proto, C.proto) and the output file artifacts it generates. For example, the plugin may create a "single" unified file artifact encompassing all the information in all the input protos.

  - The plugin writes out the generated file artifacts onto its stdout.

- Step 4: The protoc tool captures the plugin's stdout and, for each generated file artifact, serializes it onto disk.

Questions

Any command line argument to protoc in the format --<pluginname>_out is a plugin indicator with the name "pluginname". In the above example, protoc would have encountered two plugins: go and go-grpc.

protoc uses a convention of finding an executable with the name protoc-gen-<pluginname>. This executable must be found in the folders in the $PATH variable. Since plugins are just plain executables, they can be written in any language.

You do not need to know the wire format for the AST. protoc has libraries (in several languages) that can be included by the executables to deserialize ASTs from stdin and serialize generated file artifacts onto stdout.
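The naming convention described above can be sketched in a few lines of Go. This is only an illustration of the flag-to-executable mapping, not protoc's actual lookup code; the helper name pluginBinary is hypothetical, and the sketch ignores the generators that are built into protoc itself (such as --cpp_out), which are not resolved through $PATH.

```go
package main

import (
	"fmt"
	"strings"
)

// pluginBinary returns the executable name that would be searched for on
// $PATH for a flag of the form --<pluginname>_out (with or without "=value").
// It returns "" when the flag is not a plugin output flag.
func pluginBinary(flag string) string {
	name, _, _ := strings.Cut(flag, "=")
	if !strings.HasPrefix(name, "--") || !strings.HasSuffix(name, "_out") {
		return ""
	}
	name = strings.TrimSuffix(strings.TrimPrefix(name, "--"), "_out")
	if name == "" {
		return ""
	}
	return "protoc-gen-" + name
}

func main() {
	flags := []string{"--go_out=gen", "--go-grpc_out=gen", "--proto_path=protos"}
	for _, f := range flags {
		fmt.Printf("%-22s -> %q\n", f, pluginBinary(f))
	}
}
```

Flags such as --go_opt or --proto_path do not end in _out, so they map to no plugin at all.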

Setup

As you may have guessed (again), our plugins will also need to be installed before they can be invoked by protoc. We shall install the gRPC-Gateway plugins.

For a detailed set of instructions, follow the gRPC-Gateway installation setup. Briefly:

go get \
    github.com/grpc-ecosystem/grpc-gateway/v2/protoc-gen-grpc-gateway \
    github.com/grpc-ecosystem/grpc-gateway/v2/protoc-gen-openapiv2 \
    google.golang.org/protobuf/cmd/protoc-gen-go \
    google.golang.org/grpc/cmd/protoc-gen-go-grpc

# Install after the get is required
go install \
    github.com/grpc-ecosystem/grpc-gateway/v2/protoc-gen-grpc-gateway \
    github.com/grpc-ecosystem/grpc-gateway/v2/protoc-gen-openapiv2 \
    google.golang.org/protobuf/cmd/protoc-gen-go \
    google.golang.org/grpc/cmd/protoc-gen-go-grpc

This will install the following four plugins in your $GOBIN folder:

- protoc-gen-grpc-gateway: The gRPC Gateway generator

- protoc-gen-openapiv2: Swagger/OpenAPI spec generator

- protoc-gen-go: The Go protobuf generator

- protoc-gen-go-grpc: Go gRPC server stub and client generator

Make sure that your "GOBIN" folder is in your PATH.

Add Makefile Targets

Assuming you are using the example from Part 1, add an extra target to the Makefile:

gwprotos:
	echo "Generating gRPC Gateway bindings and OpenAPI spec"
	protoc -I . --grpc-gateway_out $(OUT_DIR) \
		--grpc-gateway_opt logtostderr=true \
		--grpc-gateway_opt paths=source_relative \
		--grpc-gateway_opt generate_unbound_methods=true \
		--proto_path=$(PROTO_DIR)/onehub/v1/ \
		$(PROTO_DIR)/onehub/v1/*.proto

Notice how the parameter types are similar to the ones in Part 1 (when we were generating Go bindings). For each file X.proto, just like the go and go-grpc plugins, an X.pb.gw.go file is created that contains the HTTP bindings for our service.
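As a small illustration of the file naming, the sketch below lists the Go files one would expect next to a given .proto input when all three plugins run with paths=source_relative. The helper generatedFiles is hypothetical, and it assumes the proto file declares at least one service (the go-grpc and grpc-gateway plugins only have something to emit for files that contain services).

```go
package main

import (
	"fmt"
	"strings"
)

// generatedFiles lists the Go files expected for one .proto input when the
// go, go-grpc, and grpc-gateway plugins all use paths=source_relative.
func generatedFiles(protoPath string) []string {
	base := strings.TrimSuffix(protoPath, ".proto")
	return []string{
		base + ".pb.go",      // messages (protoc-gen-go)
		base + "_grpc.pb.go", // service stubs (protoc-gen-go-grpc)
		base + ".pb.gw.go",   // HTTP bindings (protoc-gen-grpc-gateway)
	}
}

func main() {
	for _, f := range generatedFiles("onehub/v1/topics.proto") {
		fmt.Println(f)
	}
}
```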

Customizing the Generated HTTP Bindings

In the previous sections, .pb.gw.go files were created containing default HTTP bindings of our respective services and methods. This is because we had not provided any URL bindings, HTTP verbs (GET, POST, etc.), or parameter mappings. We shall address that shortcoming now by adding custom HTTP annotations to the service's definition.

While all our services have a similar structure, we will look at the Topic service for its HTTP annotations.

Topic service with HTTP annotations:

syntax = "proto3";
import "google/protobuf/field_mask.proto";
option go_package = "github.com/onehub/protos";
package onehub.v1;
import "onehub/v1/models.proto";
import "google/api/annotations.proto";

/**
 * Service for operating on topics
 */
service TopicService {
  /**
   * Create a new topic
   */
  rpc CreateTopic(CreateTopicRequest) returns (CreateTopicResponse) {
    option (google.api.http) = {
      post: "/v1/topics",
      body: "*",
    };
  }

  /**
   * List all topics from a user.
   */
  rpc ListTopics(ListTopicsRequest) returns (ListTopicsResponse) {
    option (google.api.http) = {
      get: "/v1/topics"
    };
  }

  /**
   * Get a particular topic
   */
  rpc GetTopic(GetTopicRequest) returns (GetTopicResponse) {
    option (google.api.http) = {
      get: "/v1/topics/{id=*}"
    };
  }

  /**
   * Batch get multiple topics by ID
   */
  rpc GetTopics(GetTopicsRequest) returns (GetTopicsResponse) {
    option (google.api.http) = {
      get: "/v1/topics:batchGet"
    };
  }

  /**
   * Delete a particular topic
   */
  rpc DeleteTopic(DeleteTopicRequest) returns (DeleteTopicResponse) {
    option (google.api.http) = {
      delete: "/v1/topics/{id=*}"
    };
  }

  /**
   * Updates specific fields of a topic
   */
  rpc UpdateTopic(UpdateTopicRequest) returns (UpdateTopicResponse) {
    option (google.api.http) = {
      patch: "/v1/topics/{topic.id=*}"
      body: "*"
    };
  }
}

/**
 * Topic creation request object
 */
message CreateTopicRequest {
  /**
   * Topic being created
   */
  Topic topic = 1;
}

/**
 * Response of a topic creation.
 */
message CreateTopicResponse {
  /**
   * Topic being created
   */
  Topic topic = 1;
}

/**
 * A topic search request. For now only pagination params are provided.
 */
message ListTopicsRequest {
  /**
   * Instead of an offset, an abstract "page" key is provided that offers
   * an opaque "pointer" into some offset in a result set.
   */
  string page_key = 1;

  /**
   * Number of results to return.
   */
  int32 page_size = 2;
}

/**
 * Response of a topic search/listing.
 */
message ListTopicsResponse {
  /**
   * The list of topics found as part of this response.
   */
  repeated Topic topics = 1;

  /**
   * The key/pointer string that subsequent List requests should pass to
   * continue the pagination.
   */
  string next_page_key = 2;
}

/**
 * Request to get a topic.
 */
message GetTopicRequest {
  /**
   * ID of the topic to be fetched
   */
  string id = 1;
}

/**
 * Topic get response
 */
message GetTopicResponse {
  Topic topic = 1;
}

/**
 * Request to batch get topics
 */
message GetTopicsRequest {
  /**
   * IDs of the topics to be fetched
   */
  repeated string ids = 1;
}

/**
 * Topic batch-get response
 */
message GetTopicsResponse {
  map<string, Topic> topics = 1;
}

/**
 * Request to delete a topic.
 */
message DeleteTopicRequest {
  /**
   * ID of the topic to be deleted.
   */
  string id = 1;
}

/**
 * Topic deletion response
 */
message DeleteTopicResponse {
}

/**
 * The request for (partially) updating a Topic.
 */
message UpdateTopicRequest {
  /**
   * Topic being updated
   */
  Topic topic = 1;

  /**
   * Mask of fields being updated in this Topic to make partial changes.
   */
  google.protobuf.FieldMask update_mask = 2;

  /**
   * IDs of users to be added to this topic.
   */
  repeated string add_users = 3;

  /**
   * IDs of users to be removed from this topic.
   */
  repeated string remove_users = 4;
}

/**
 * The response for (partially) updating a Topic.
 */
message UpdateTopicResponse {
  /**
   * Topic being updated
   */
  Topic topic = 1;
}

Instead of having "empty" method definitions (e.g., rpc MethodName(ReqType) returns (RespType) {}), we are now seeing "annotations" being added inside methods. Any number of annotations can be added, and each annotation is parsed by protoc and passed to all the plugins invoked by it. There are tons of annotations that can be passed, and this has a "bit of everything" in it.

Back to the HTTP bindings: Typically an HTTP annotation has a method, a URL path (with bindings within { and }), and a marking to indicate what the body parameter maps to (for PUT and POST methods).
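To make the idea of path bindings concrete, here is a minimal sketch of matching a URL path against a template such as /v1/topics/{id=*}. It is a deliberately simplified stand-in, not the gateway's real matcher: the function name matchTemplate is hypothetical, and only literal segments and single-segment bindings are handled (no ** wildcards, nested patterns, or custom-verb parsing).

```go
package main

import (
	"fmt"
	"strings"
)

// matchTemplate matches a URL path against a simplified google.api.http path
// template. Only literal segments and single-segment bindings such as {id}
// or {topic.id=*} are supported. On success it returns the bound fields.
func matchTemplate(template, path string) (map[string]string, bool) {
	tparts := strings.Split(strings.Trim(template, "/"), "/")
	pparts := strings.Split(strings.Trim(path, "/"), "/")
	if len(tparts) != len(pparts) {
		return nil, false
	}
	bound := map[string]string{}
	for i, t := range tparts {
		if strings.HasPrefix(t, "{") && strings.HasSuffix(t, "}") {
			// "{topic.id=*}" binds the segment to the field path "topic.id".
			field, _, _ := strings.Cut(t[1:len(t)-1], "=")
			if pparts[i] == "" {
				return nil, false
			}
			bound[field] = pparts[i]
			continue
		}
		if t != pparts[i] {
			return nil, false
		}
	}
	return bound, true
}

func main() {
	fmt.Println(matchTemplate("/v1/topics/{id=*}", "/v1/topics/42"))
	fmt.Println(matchTemplate("/v1/topics/{topic.id=*}", "/v1/topics/7"))
	fmt.Println(matchTemplate("/v1/topics", "/v1/topics/42"))
}
```

The bound field paths (id, topic.id) are what get copied into the corresponding request message fields before the gRPC call is made.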

For example, in the CreateTopic method, the method is a POST request to "/v1/topics" with the body (*) corresponding to the JSON representation of the CreateTopicRequest message type; i.e., our request is expected to look like this:

{
  "topic": {... topic object...}
}

Naturally, the response object of this would be the JSON representation of the CreateTopicResponse message.
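For illustration, the request body can be produced from Go with plain structs. The Topic and CreateTopicRequest types below are hand-written stand-ins for the generated protobuf types (an assumption made to keep the sketch dependency-free), and only the name and creator_id fields, which mirror the test requests later in this article, are included.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Topic is a hand-written stand-in for the generated message type; only two
// illustrative fields are included.
type Topic struct {
	Name      string `json:"name"`
	CreatorID string `json:"creator_id"`
}

// CreateTopicRequest mirrors the shape of the request message: a single
// "topic" field wrapping the Topic object.
type CreateTopicRequest struct {
	Topic Topic `json:"topic"`
}

// requestBody renders the JSON body for a POST to /v1/topics.
func requestBody(name, creator string) string {
	b, err := json.Marshal(CreateTopicRequest{Topic: Topic{Name: name, CreatorID: creator}})
	if err != nil {
		panic(err)
	}
	return string(b)
}

func main() {
	fmt.Println(requestBody("First Topic", "user1"))
}
```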

The other examples in the topic service as well as in the other services are reasonably intuitive. Feel free to read through them to get any finer details. Before we are off to the next section implementing the proxy, we need to regenerate the pb.gw.go files to incorporate these new bindings:

make all

We will now see the following error:

google/api/annotations.proto: File not found.
topics.proto:8:1: Import "google/api/annotations.proto" was not found or had errors.

Unfortunately, there is no "package manager" for protos at present. This void is being filled by an amazing tool: Buf.build (which will be the main topic in Part 3 of this series). In the meantime, we will resolve this by manually copying (shudder) http.proto and annotations.proto.

So, our protos folder will have the following structure:

protos
├── google
│   └── api
│       ├── annotations.proto
│       └── http.proto
└── onehub
    └── v1
        ├── topics.proto
        ├── messages.proto
        └── ...

However, we will follow a slightly different structure. Instead of copying files to the protos folder, we will create a vendors folder at the root and symlink to it from the protos folder (this symlinking will be taken care of by our Makefile). Our new folder structure is:

onehub
├── Makefile
├── ...
├── vendors
│   └── google
│       └── api
│           ├── annotations.proto
│           └── http.proto
└── protos
    ├── google -> onehub/vendors/google
    └── onehub
        └── v1
            ├── topics.proto
            ├── messages.proto
            └── ...

Our updated Makefile is shown below.

Makefile for HTTP bindings:

# Some vars to determine go locations etc
GOROOT=$(shell which go)
GOPATH=$(HOME)/go
GOBIN=$(GOPATH)/bin

# Evaluates the abs path of the directory where this Makefile resides
SRC_DIR:=$(shell dirname $(realpath $(firstword $(MAKEFILE_LIST))))

# Where the protos exist
PROTO_DIR:=$(SRC_DIR)/protos

# Where we want to generate server stubs, clients etc
OUT_DIR:=$(SRC_DIR)/gen/go

all: createdirs printenv goprotos gwprotos openapiv2 cleanvendors

goprotos:
	echo "Generating GO bindings"
	protoc --go_out=$(OUT_DIR) --go_opt=paths=source_relative \
		--go-grpc_out=$(OUT_DIR) --go-grpc_opt=paths=source_relative \
		--proto_path=$(PROTO_DIR) \
		$(PROTO_DIR)/onehub/v1/*.proto

gwprotos:
	echo "Generating gRPC Gateway bindings and OpenAPI spec"
	protoc -I . --grpc-gateway_out $(OUT_DIR) \
		--grpc-gateway_opt logtostderr=true \
		--grpc-gateway_opt paths=source_relative \
		--grpc-gateway_opt generate_unbound_methods=true \
		--proto_path=$(PROTO_DIR) \
		$(PROTO_DIR)/onehub/v1/*.proto

openapiv2:
	echo "Generating OpenAPI specs"
	protoc -I . --openapiv2_out $(SRC_DIR)/gen/openapiv2 \
		--openapiv2_opt logtostderr=true \
		--openapiv2_opt generate_unbound_methods=true \
		--openapiv2_opt allow_merge=true \
		--openapiv2_opt merge_file_name=allservices \
		--proto_path=$(PROTO_DIR) \
		$(PROTO_DIR)/onehub/v1/*.proto

printenv:
	@echo MAKEFILE_LIST=$(MAKEFILE_LIST)
	@echo SRC_DIR=$(SRC_DIR)
	@echo PROTO_DIR=$(PROTO_DIR)
	@echo OUT_DIR=$(OUT_DIR)
	@echo GOROOT=$(GOROOT)
	@echo GOPATH=$(GOPATH)
	@echo GOBIN=$(GOBIN)

createdirs:
	rm -Rf $(OUT_DIR)
	mkdir -p $(OUT_DIR)
	mkdir -p $(SRC_DIR)/gen/openapiv2
	cd $(PROTO_DIR) && ( \
		if [ ! -d google ]; then ln -s $(SRC_DIR)/vendors/google . ; fi \
	)

cleanvendors:
	rm -f $(PROTO_DIR)/google

Now running make should be error-free and result in the updated bindings in the .pb.gw.go files.

Implementing the HTTP Gateway Proxy

Lo and behold, we now have a "proxy" (in the .pb.gw.go files) that translates HTTP requests and converts them into gRPC requests. On the return path, gRPC responses are also translated to HTTP responses. What is now needed is a service that runs an HTTP server that continuously facilitates this translation.

We have now added a startGatewayServer method in cmd/server.go that also starts an HTTP server to do all this back-and-forth translation:

import (
	... // previous imports

	// new imports
	"context"
	"net/http"

	"github.com/grpc-ecosystem/grpc-gateway/v2/runtime"
)

func startGatewayServer(grpc_addr string, gw_addr string) {
	ctx := context.Background()
	mux := runtime.NewServeMux()

	opts := []grpc.DialOption{grpc.WithInsecure()}

	// Register each server with the mux here
	if err := v1.RegisterTopicServiceHandlerFromEndpoint(ctx, mux, grpc_addr, opts); err != nil {
		log.Fatal(err)
	}
	if err := v1.RegisterMessageServiceHandlerFromEndpoint(ctx, mux, grpc_addr, opts); err != nil {
		log.Fatal(err)
	}

	// ListenAndServe only returns on error, so log it instead of dropping it
	log.Fatal(http.ListenAndServe(gw_addr, mux))
}

func main() {
	flag.Parse()
	go startGRPCServer(*addr)
	// Argument order follows the signature: gRPC address first, gateway address second
	startGatewayServer(*addr, *gw_addr)
}

In this implementation, we created a new runtime.ServeMux and registered each of our gRPC services' handlers using the v1.Register<ServiceName>HandlerFromEndpoint method. This method associates all of the URLs found in the <ServiceName> service's protos with this particular mux. Note how all these handlers are associated with the port on which the gRPC service is already running (port 9000 by default). Finally, the HTTP server is started on its own port (8080 by default).
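Conceptually, each registered handler maps an HTTP route onto a call to the backing service and writes the reply as JSON. The standard-library sketch below imitates that for a single route. It is not the generated code: topicGetter, fakeBackend, newGatewayMux, and callGateway are hypothetical names, and the backend is an in-process fake rather than a gRPC connection to port 9000.

```go
package main

import (
	"context"
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// topicGetter stands in for the generated gRPC client interface.
type topicGetter interface {
	GetTopic(ctx context.Context, id string) (map[string]string, error)
}

// fakeBackend plays the role of the gRPC service.
type fakeBackend struct{}

func (fakeBackend) GetTopic(_ context.Context, id string) (map[string]string, error) {
	return map[string]string{"id": id, "name": "First Topic"}, nil
}

// newGatewayMux maps GET /v1/topics/{id} onto backend.GetTopic and writes
// the reply as JSON.
func newGatewayMux(backend topicGetter) *http.ServeMux {
	mux := http.NewServeMux()
	mux.HandleFunc("/v1/topics/", func(w http.ResponseWriter, r *http.Request) {
		id := strings.TrimPrefix(r.URL.Path, "/v1/topics/")
		if r.Method != http.MethodGet || id == "" || strings.Contains(id, "/") {
			http.NotFound(w, r)
			return
		}
		topic, err := backend.GetTopic(r.Context(), id)
		if err != nil {
			http.Error(w, err.Error(), http.StatusInternalServerError)
			return
		}
		w.Header().Set("Content-Type", "application/json")
		json.NewEncoder(w).Encode(map[string]any{"topic": topic})
	})
	return mux
}

// callGateway issues an in-memory request and returns the status and body.
func callGateway(method, path string) (int, string) {
	rec := httptest.NewRecorder()
	newGatewayMux(fakeBackend{}).ServeHTTP(rec, httptest.NewRequest(method, path, nil))
	return rec.Code, strings.TrimSpace(rec.Body.String())
}

func main() {
	fmt.Println(callGateway(http.MethodGet, "/v1/topics/1"))
}
```

The real gateway does considerably more (protobuf-aware JSON marshalling, error mapping, streaming), which is why the generated handlers are registered rather than written by hand.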

You might be wondering why we are using the NewServeMux in the github.com/grpc-ecosystem/grpc-gateway/v2/runtime module and not the version in the standard library's net/http module.

This is because the grpc-gateway/v2/runtime module's ServeMux is custom-built to act specifically as a router for the underlying gRPC services it is fronting. It also accepts a list of ServeMuxOption (ServeMux handler) methods that act as middleware for intercepting an HTTP call that is in the process of being converted to a gRPC message sent to the underlying gRPC service. This middleware can be used to set extra metadata needed by the gRPC service in a common way transparently. We will see more about this in a future post about gRPC interceptors in this demo service.
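As a rough idea of what such metadata middleware does, the sketch below copies an allow-list of HTTP headers into a lower-cased key/value map, which is the general shape of gRPC metadata. It uses only the standard library: metadataFromRequest, demoMetadata, and the forwardedHeaders allow-list are hypothetical. In the gateway itself, a function of similar shape (returning metadata.MD) would be supplied through an option such as runtime.WithMetadata.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// forwardedHeaders is a hypothetical allow-list of headers to pass on.
var forwardedHeaders = []string{"Authorization", "X-Request-Id"}

// metadataFromRequest copies allow-listed headers into a lower-cased
// key/value map; all other headers are dropped.
func metadataFromRequest(r *http.Request) map[string][]string {
	md := map[string][]string{}
	for _, h := range forwardedHeaders {
		if vals := r.Header.Values(h); len(vals) > 0 {
			md[strings.ToLower(h)] = vals
		}
	}
	return md
}

// demoMetadata builds an in-memory request and extracts its metadata.
func demoMetadata() map[string][]string {
	r := httptest.NewRequest(http.MethodGet, "/v1/topics", nil)
	r.Header.Set("Authorization", "Bearer abc")
	r.Header.Set("Cookie", "ignored=1")
	return metadataFromRequest(r)
}

func main() {
	fmt.Println(demoMetadata())
}
```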

Generating OpenAPI Specs

Several API consumers seek OpenAPI specs that describe RESTful endpoints (methods, verbs, body payloads, etc). We can generate an OpenAPI spec file (previously Swagger files) that contains information about our service methods along with their HTTP bindings. Add another Makefile target:

openapiv2:
	echo "Generating OpenAPI specs"
	protoc -I . --openapiv2_out $(SRC_DIR)/gen/openapiv2 \
		--openapiv2_opt logtostderr=true \
		--openapiv2_opt generate_unbound_methods=true \
		--openapiv2_opt allow_merge=true \
		--openapiv2_opt merge_file_name=allservices \
		--proto_path=$(PROTO_DIR) \
		$(PROTO_DIR)/onehub/v1/*.proto

Like all other plugins, the openapiv2 plugin also generates one .swagger.json per .proto file. However, this changes the semantics of Swagger, as each Swagger file is treated as its own "endpoint," whereas in our case what we really want is a single endpoint that fronts all the services. In order to produce a single "merged" Swagger file, we pass the allow_merge=true parameter to the above command. In addition, we also pass the name of the file to be generated (merge_file_name=allservices). This results in a gen/openapiv2/allservices.swagger.json file that can be read, visualized, and tested with SwaggerUI.

Start this new server and you should see something like this:

% onehub % go run cmd/server.go
Starting grpc endpoint on :9000:
Starting grpc gateway server on: :8080

The additional HTTP gateway is now running on port 8080, which we will query next.

Testing It All Out

Now instead of making grpc_cli calls, we can issue HTTP calls via the ubiquitous curl command (also make sure you install jq for pretty printing your JSON output):

Create a Topic

% curl -s -d '{"topic": {"name": "First Topic", "creator_id": "user1"}}' localhost:8080/v1/topics | jq
{
  "topic": {
    "createdAt": "2023-07-07T20:53:31.629771Z",
    "updatedAt": "2023-07-07T20:53:31.629771Z",
    "id": "1",
    "creatorId": "user1",
    "name": "First Topic",
    "users": []
  }
}

And another:

% curl -s localhost:8080/v1/topics -d '{"topic": {"name": "Urgent topic", "creator_id": "user2", "users": ["user1", "user2", "user3"]}}' | jq
{
  "topic": {
    "createdAt": "2023-07-07T20:56:52.567691Z",
    "updatedAt": "2023-07-07T20:56:52.567691Z",
    "id": "2",
    "creatorId": "user2",
    "name": "Urgent topic",
    "users": [
      "user1",
      "user2",
      "user3"
    ]
  }
}

List All Topics

% curl -s localhost:8080/v1/topics | jq
{
  "topics": [
    {
      "createdAt": "2023-07-07T20:53:31.629771Z",
      "updatedAt": "2023-07-07T20:53:31.629771Z",
      "id": "1",
      "creatorId": "user1",
      "name": "First Topic",
      "users": []
    },
    {
      "createdAt": "2023-07-07T20:56:52.567691Z",
      "updatedAt": "2023-07-07T20:56:52.567691Z",
      "id": "2",
      "creatorId": "user2",
      "name": "Urgent topic",
      "users": [
        "user1",
        "user2",
        "user3"
      ]
    }
  ],
  "nextPageKey": ""
}

Get Topics by IDs

Here, "list" values (e.g., ids) are possible by repeating them as query parameters:

% curl -s "localhost:8080/v1/topics?ids=1&ids=2" | jq
{
  "topics": [
    {
      "createdAt": "2023-07-07T20:53:31.629771Z",
      "updatedAt": "2023-07-07T20:53:31.629771Z",
      "id": "1",
      "creatorId": "user1",
      "name": "First Topic",
      "users": []
    },
    {
      "createdAt": "2023-07-07T20:56:52.567691Z",
      "updatedAt": "2023-07-07T20:56:52.567691Z",
      "id": "2",
      "creatorId": "user2",
      "name": "Urgent topic",
      "users": [
        "user1",
        "user2",
        "user3"
      ]
    }
  ],
  "nextPageKey": ""
}

Delete a Topic Followed by a Listing

% curl -sX DELETE "localhost:8080/v1/topics/1" | jq
{}

% curl -s "localhost:8080/v1/topics" | jq
{
  "topics": [
    {
      "createdAt": "2023-07-07T20:56:52.567691Z",
      "updatedAt": "2023-07-07T20:56:52.567691Z",
      "id": "2",
      "creatorId": "user2",
      "name": "Urgent topic",
      "users": [
        "user1",
        "user2",
        "user3"
      ]
    }
  ],
  "nextPageKey": ""
}

Best Practices

Separation of Gateway and gRPC Endpoints

In our example, we served the Gateway and gRPC services on their own addresses. Instead, we could have directly invoked the gRPC service methods; i.e., by directly creating NewTopicService(nil) and invoking methods on those. However, running these two services separately meant we could have other (internal) services directly access the gRPC service instead of going through the Gateway. This separation of concerns also meant these two services could be deployed separately (when on different hosts) instead of needing a full upgrade of the entire stack.

HTTPS Instead of HTTP

Even though in this example the startGatewayServer method started an HTTP server, it is highly recommended to run the gateway over an HTTPS server for security, preventing man-in-the-middle attacks, and protecting clients' data.
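A minimal sketch of serving a handler over HTTPS with the standard library follows. The helper newTLSGateway, the :8443 address, and the certificate file names are assumptions for illustration; in the gateway, the handler passed in would be the runtime mux.

```go
package main

import (
	"crypto/tls"
	"fmt"
	"net/http"
	"time"
)

// newTLSGateway wraps a handler (for example, the gateway mux) in an
// http.Server configured for HTTPS with a minimum TLS version.
func newTLSGateway(addr string, h http.Handler) *http.Server {
	return &http.Server{
		Addr:              addr,
		Handler:           h,
		ReadHeaderTimeout: 10 * time.Second,
		TLSConfig:         &tls.Config{MinVersion: tls.VersionTLS12},
	}
}

func main() {
	srv := newTLSGateway(":8443", http.NotFoundHandler())
	fmt.Println("would serve HTTPS on", srv.Addr)
	// With real certificate and key files in place (names are placeholders):
	// log.Fatal(srv.ListenAndServeTLS("server.crt", "server.key"))
}
```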

Use of Authentication

This example did not have any authentication built in. However, authentication (authn) and authorization (authz) are very important pillars of any service. The Gateway (and the gRPC service) are no exceptions to this. The use of middleware to handle authn and authz is critical to the gateway. Authentication can be applied with several mechanisms like OAuth2 and JWT to verify users before passing a request to the gRPC service. Alternatively, the tokens could be passed as metadata to the gRPC service, which can perform the validation before processing the request. The use of middleware in the Gateway (and interceptors in the gRPC service) will be shown in Part 4 of this series.
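Until then, one possible shape for gateway-side authentication is a plain net/http middleware that rejects requests before they ever reach the mux. This is a sketch under simplifying assumptions: requireBearer and statusFor are hypothetical names, and the validate callback compares against a fixed string, whereas a real deployment would verify a JWT or call an OAuth2 provider.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// requireBearer rejects requests whose bearer token fails validate and
// passes the rest on to next (for example, the gateway mux).
func requireBearer(validate func(token string) bool, next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		auth := r.Header.Get("Authorization")
		if !strings.HasPrefix(auth, "Bearer ") || !validate(strings.TrimPrefix(auth, "Bearer ")) {
			http.Error(w, "unauthorized", http.StatusUnauthorized)
			return
		}
		next.ServeHTTP(w, r)
	})
}

// statusFor runs one in-memory request through the middleware and returns
// the resulting HTTP status code.
func statusFor(authHeader string) int {
	ok := http.HandlerFunc(func(w http.ResponseWriter, _ *http.Request) {
		w.WriteHeader(http.StatusOK)
	})
	// Placeholder check; stands in for real token verification.
	h := requireBearer(func(t string) bool { return t == "secret-token" }, ok)
	req := httptest.NewRequest(http.MethodGet, "/v1/topics", nil)
	if authHeader != "" {
		req.Header.Set("Authorization", authHeader)
	}
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	return rec.Code
}

func main() {
	fmt.Println(statusFor("Bearer secret-token"), statusFor("Bearer nope"), statusFor(""))
}
```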

Caching for Improved Performance

Caching improves performance by avoiding database (or heavy) lookups of data that may be frequently accessed (and/or not often modified). The Gateway server can also cache responses from the gRPC service (with possible expiration timeouts) to reduce the load on the gRPC server and improve response times for clients.

Note: Just like authentication, caching can also be performed at the gRPC server. However, this would not prevent excess calls that may otherwise have been prevented by the gateway service.
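A gateway-side cache with expiration can be as small as the sketch below. The ttlCache type and the countFetches helper are hypothetical; for simplicity, the lock is held while the backend is called, and expired entries are only replaced, never evicted, which a production cache would want to refine.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

type entry struct {
	body    string
	expires time.Time
}

// ttlCache is a tiny in-memory cache keyed by request URL.
type ttlCache struct {
	mu   sync.Mutex
	ttl  time.Duration
	data map[string]entry
}

func newTTLCache(ttl time.Duration) *ttlCache {
	return &ttlCache{ttl: ttl, data: map[string]entry{}}
}

// get returns the cached body for key, calling fetch on a miss or after the
// entry has expired.
func (c *ttlCache) get(key string, fetch func() string) string {
	c.mu.Lock()
	defer c.mu.Unlock()
	if e, ok := c.data[key]; ok && time.Now().Before(e.expires) {
		return e.body
	}
	body := fetch()
	c.data[key] = entry{body: body, expires: time.Now().Add(c.ttl)}
	return body
}

// countFetches reports how many backend calls n lookups of one key cause.
func countFetches(n int, ttl time.Duration) int {
	calls := 0
	c := newTTLCache(ttl)
	for i := 0; i < n; i++ {
		c.get("/v1/topics/1", func() string {
			calls++
			return `{"topic":{"id":"1"}}`
		})
	}
	return calls
}

func main() {
	fmt.Println("with 1m TTL:", countFetches(5, time.Minute))
	fmt.Println("with no TTL:", countFetches(5, 0))
}
```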

Using Load Balancers

While also applicable to gRPC servers, HTTP load balancers (in front of the Gateway) enable sharding to improve the scalability and reliability of our services, especially during high-traffic periods.
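In practice an off-the-shelf load balancer would sit in front of the gateway, but the core idea of spreading requests over several gateway instances can be sketched as a round-robin picker. The roundRobin type, the pickN helper, and the backend addresses are hypothetical.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// roundRobin hands out backend addresses in rotation.
type roundRobin struct {
	next     uint64 // kept first so it stays 64-bit aligned for atomic use
	backends []string
}

// pick returns the next backend; it is safe for concurrent use.
func (r *roundRobin) pick() string {
	n := atomic.AddUint64(&r.next, 1) - 1
	return r.backends[n%uint64(len(r.backends))]
}

// pickN returns the backends chosen for n consecutive requests.
func pickN(backends []string, n int) []string {
	rr := &roundRobin{backends: backends}
	out := make([]string, 0, n)
	for i := 0; i < n; i++ {
		out = append(out, rr.pick())
	}
	return out
}

func main() {
	fmt.Println(pickN([]string{"gw-1:8080", "gw-2:8080"}, 3))
}
```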

Conclusion

By adding a gRPC Gateway to your gRPC services and applying best practices, your services can now be exposed to clients using different platforms and protocols. Adhering to best practices also ensures reliability, security, and high performance.

In this article, we have:

- Seen the benefits of wrapping our services with a Gateway service

- Added HTTP bindings to an existing set of services

- Learned the best practices for enacting Gateway services over your gRPC services