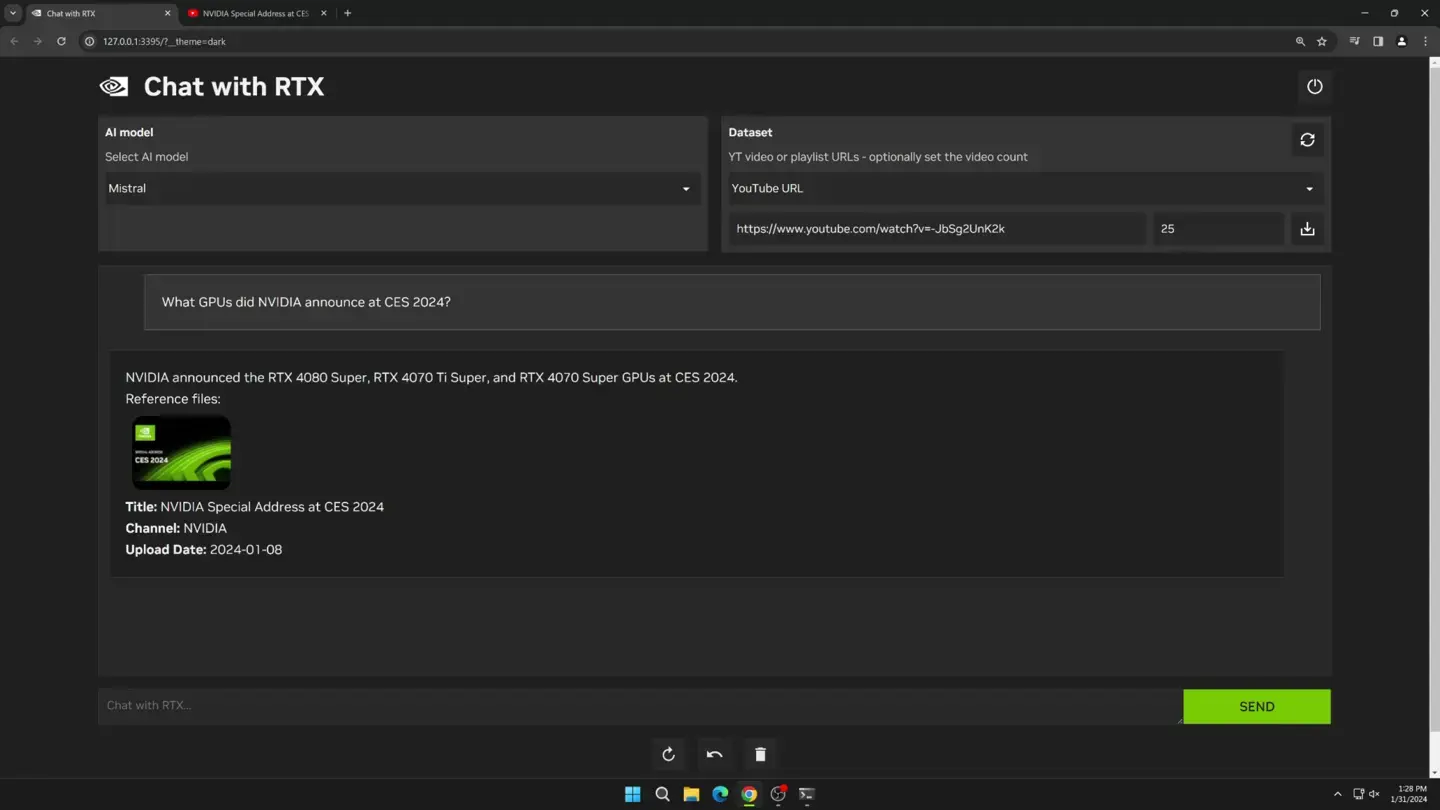

NVIDIA has recently introduced a groundbreaking AI chatbot, Chat with RTX. It is designed to run locally on Windows PCs equipped with NVIDIA RTX 30 or 40-series GPUs. This cutting-edge tool lets users personalize a chatbot with their own content. It keeps sensitive data on their devices and avoids the need for cloud-based services. Chat with RTX is designed as a local tool that users can run without Internet access. All GeForce RTX 30 and 40 GPUs with at least 8 GB of video memory support the app.

Chat with RTX supports multiple file formats, including text, PDF, DOC/DOCX, and XML. Simply point the app to the folder containing the files and it will load them into its library in seconds. Users can also provide the URL of a YouTube playlist, and the app will load the transcripts of the videos in that playlist. This lets users query the content those videos cover.
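To make the folder-loading step more concrete, here is a minimal Python sketch, under our own assumptions, of how a tool like this might pick out supported files from a folder before indexing them. The extension set mirrors the formats named above; `find_supported_files` and `SUPPORTED_EXTENSIONS` are hypothetical names, not part of Chat with RTX.

```python
from pathlib import Path

# Hypothetical: extensions matching the formats listed above
# (plain text, PDF, DOC/DOCX, XML). Not taken from Chat with RTX itself.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def find_supported_files(folder: str) -> list[Path]:
    """Recursively collect files under `folder` whose extension is supported.

    The comparison is case-insensitive, so "report.PDF" is picked up too.
    """
    root = Path(folder)
    return sorted(
        p for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in SUPPORTED_EXTENSIONS
    )
```

Calling `find_supported_files("my_notes")` on a hypothetical folder would return every matching file in it and its subfolders, skipping images and other unsupported types; a real app would then extract the text from each file and index it.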

Judging from the official description, users can use Chat with RTX much as they would use ChatGPT, by asking different queries. However, the generated results will be based entirely on specific datasets. This makes it better suited to tasks such as generating summaries and quickly searching documents.

Having an RTX GPU with TensorRT-LLM support means users can work with all their data and projects locally, so there is no need to store that data in the cloud. This saves time and provides more accurate results. NVIDIA said that TensorRT-LLM v0.6.0 will improve performance by up to five times and will be released later this month. It will also support other LLMs such as Mistral 7B and Nemotron 3 8B.


Key Features of Chat with RTX

- Local Processing: Chat with RTX runs locally on Windows RTX PCs and workstations, providing fast responses and keeping user data private.

- Personalization: Users can customize the chatbot with their own content, including text files, PDFs, DOC/DOCX, XML, and YouTube videos.

- Retrieval-Augmented Generation (RAG): The chatbot uses RAG, NVIDIA TensorRT-LLM software, and NVIDIA RTX acceleration to generate content and provide contextually relevant answers.

- Open-Source Large Language Models (LLMs): Users can choose between two open-source LLMs, Mistral or Llama 2, to power their chatbot.

- Developer-Friendly: Chat with RTX is built from the TensorRT-LLM RAG developer reference project, available on GitHub, which lets developers build their own RAG-based applications.
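In broad terms, retrieval-augmented generation first finds the passages in the user's files that are most relevant to a question, then hands those passages to the LLM together with the question. The Python sketch below illustrates only that retrieve-then-prompt step, under simplifying assumptions: it scores text chunks with a bag-of-words cosine similarity instead of the neural embeddings a real system would likely use, and it stops at building the prompt rather than calling a model. All function names are hypothetical; this is not the code of Chat with RTX or TensorRT-LLM.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a crude stand-in for a real embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query (the 'retrieval' step)."""
    q = Counter(tokenize(query))
    ranked = sorted(
        chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True
    )
    return ranked[:k]


def build_prompt(query: str, chunks: list[str], k: int = 2) -> str:
    """Prepend the retrieved context to the question (the 'augmentation' step).

    A local LLM such as Mistral or Llama 2 would then generate the answer.
    """
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

For example, with the chunks `["Chat with RTX runs locally on RTX 30 and 40 series GPUs.", "The download is about 35GB.", "Users can pick Mistral or Llama 2."]`, asking "How big is the download?" with `k=1` retrieves only the 35GB sentence, so the model answers from the user's own data rather than from its general training.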

Requirements and Limitations

- Hardware Requirements: Chat with RTX requires an NVIDIA GeForce RTX 30 Series GPU or higher with at least 8GB of VRAM, Windows 10 or 11, and the latest NVIDIA GPU drivers.

- Size: The chatbot is a 35GB download, and the Python instance takes up around 3GB of RAM.

- The chatbot is still at the early developer demo stage, so it has limited context memory and inaccurate source attribution.

Applications and Benefits

- Data Research: Chat with RTX can be a valuable tool for data research, especially for journalists or anyone who needs to analyze multiple documents.

- Privacy and Security: Because data and responses stay within the user's local environment, the risk of exposing sensitive data externally is significantly reduced.

- Education and Learning: Chat with RTX can provide quick tutorials and how-tos based on the best educational resources.

Conclusion

Chat with RTX is an exciting development in the world of AI, offering a locally run, personalized chatbot that can boost productivity while reducing privacy concerns. As an early developer demo, it still has some limitations, but it shows the potential of accelerating LLMs with RTX GPUs and hints at what an AI chatbot running locally on your PC could do in the future. What do you think about this new feature? Let us know your thoughts in the comment section below.