When data is analyzed and processed in real time, it can yield insights and actionable information either instantly or with little or no delay from the time the data is collected. The ability to collect, process, and store user-generated data in real time is crucial for many applications in today's data-driven environment.

Real-time data analytics matters for many reasons, including timely decision-making, IoT and sensor data processing, improved customer experience, proactive problem resolution, and fraud detection and security. Rising to the demands of these varied real-time data processing scenarios, Apache Kafka has established itself as a reliable and scalable event streaming platform.

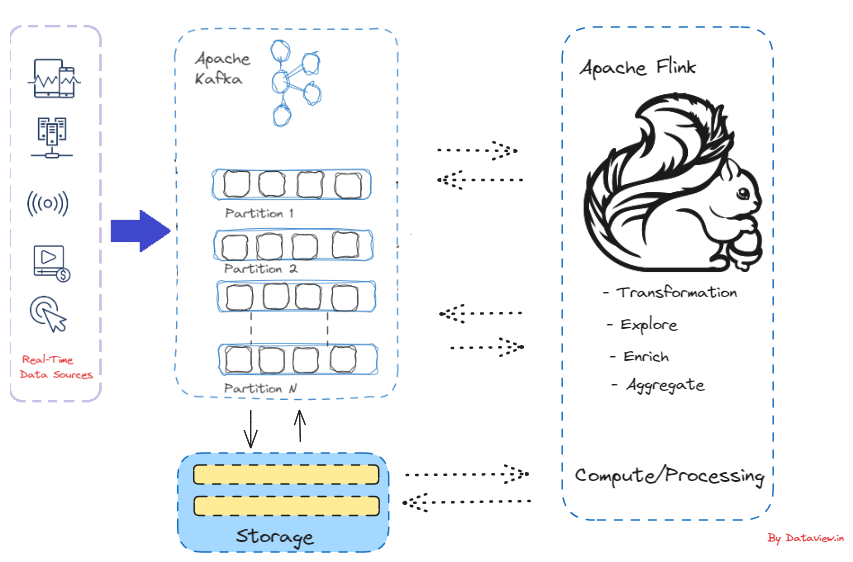

In short, event streaming is the practice of capturing data in real time as streams of events from event sources such as databases, sensors, and software applications. Apache Flink is a powerful open-source framework built with real-time data processing and analytics in mind. For scenarios where fast insights and minimal processing latency are essential, it offers a consistent and efficient platform for handling continuous streams of data.
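To make "handling continuous streams" concrete, here is a minimal sketch in plain Python (not Flink's API) of the stream processing model: events are consumed one at a time from a possibly unbounded source, a small amount of state is kept between events, and an updated result is emitted immediately after each event rather than after a batch completes. The event shape `(source_id, value)` and the function name `running_averages` are hypothetical, chosen only for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

# Hypothetical event shape for this sketch: (source_id, measured_value).
Event = Tuple[str, float]


def running_averages(events: Iterable[Event]) -> Iterator[Tuple[str, float]]:
    """Process a possibly unbounded stream one event at a time.

    State (a count and a sum per source) is kept between events, and an
    updated average is emitted right after each event instead of after a batch.
    """
    count: Dict[str, int] = defaultdict(int)
    total: Dict[str, float] = defaultdict(float)
    for source, value in events:
        count[source] += 1
        total[source] += value
        yield source, total[source] / count[source]


if __name__ == "__main__":
    readings = [("sensor-a", 20.0), ("sensor-b", 30.0), ("sensor-a", 22.0)]
    for source, avg in running_averages(readings):
        print(source, avg)
```

Because `running_averages` is a generator, it works equally well on a finite list or on an endless iterator fed by a live source; a real Flink job adds parallelism, partitioned state, and fault tolerance on top of this basic idea.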

Reasons for the Improved Collaboration Between Apache Flink and Kafka

- Apache Flink joined the Apache Incubator in 2014, and since its inception, Apache Kafka has consistently been one of the most frequently used connectors for Flink. Flink is a data processing engine: it runs your processing logic but does not provide its own storage system for the streams it processes. Since Kafka provides the foundational layer for storing streaming data, Flink can serve as the computational layer on top of Kafka, powering real-time applications and pipelines.

- Over the years, Apache Flink has developed first-rate support for building Kafka-based applications. Using the many tools and services offered by the Kafka ecosystem, Flink applications can use Kafka as both a source and a sink. Flink natively supports widely used formats such as Avro, JSON, and Protobuf, the same formats commonly used for Kafka messages.

- Apache Kafka has proved to be a particularly good fit for Apache Flink. Unlike alternative systems such as ActiveMQ and RabbitMQ, Kafka can durably store data streams for long periods, even indefinitely, allowing consumers to read streams in parallel and replay them as needed. This aligns with Flink's distributed processing model and fulfills a crucial requirement of Flink's fault tolerance mechanism: after a failure, Flink restores its state from the latest checkpoint and rewinds the Kafka source to the offsets recorded in that checkpoint, which only works if the data can be read again.

- Other external systems can be connected to Flink's Table API and SQL programs to read and write batch and streaming tables. A table source provides access to data stored in external systems such as a file system, database, message queue, or key-value store. For Kafka, each record is simply a key-value pair. A topic in a Kafka cluster is mapped to a table in Flink, and events are added to that table in the same way they are appended to the topic. In Flink, every table is equivalent to a stream of events describing the changes made to that table. When a query refers to such a table, the query runs continuously, updating its result as new events arrive, and the result is either materialized or emitted as a stream.
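The topic-to-table mapping described above can be sketched with a toy model in plain Python. This is an illustration only: `TopicPartition`, `as_append_only_table`, and `as_upsert_table` are hypothetical names, not part of Kafka or Flink. A topic partition is modeled as an append-only log of key-value records addressed by offset, so reading never removes data and any consumer can replay from any offset. The same log is then read as a table in two ways: append-only, where every record becomes a new row, and as a changelog, where the latest value per key wins and a `None` value deletes the key (similar in spirit to how Flink's `upsert-kafka` connector treats a null value as a deletion).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Record = Tuple[str, Optional[str]]  # (key, value); value None = deletion marker


@dataclass
class TopicPartition:
    """Toy stand-in for one Kafka topic partition: an append-only log."""
    records: List[Record] = field(default_factory=list)

    def append(self, key: str, value: Optional[str]) -> int:
        """Append a record and return its offset (its position in the log)."""
        self.records.append((key, value))
        return len(self.records) - 1

    def read_from(self, offset: int) -> List[Tuple[int, str, Optional[str]]]:
        """Return (offset, key, value) triples starting at `offset`.
        Reading does not consume records, so the log can be replayed."""
        return [(i, k, v) for i, (k, v) in enumerate(self.records) if i >= offset]


def as_append_only_table(log: TopicPartition) -> List[Record]:
    """Every record becomes a new row, just as it was appended to the topic."""
    return [(k, v) for _, k, v in log.read_from(0)]


def as_upsert_table(log: TopicPartition) -> Dict[str, str]:
    """Interpret the log as a changelog: latest value per key wins,
    and a None value removes the key from the table."""
    table: Dict[str, str] = {}
    for _, key, value in log.read_from(0):
        if value is None:
            table.pop(key, None)
        else:
            table[key] = value
    return table


if __name__ == "__main__":
    topic = TopicPartition()
    topic.append("user-1", "free")
    topic.append("user-2", "free")
    topic.append("user-1", "premium")
    topic.append("user-2", None)
    print(as_append_only_table(topic))
    print(as_upsert_table(topic))
    print(topic.read_from(2))  # replay from offset 2
```

Which interpretation applies depends on how the table is declared; the sketch only shows that one durable log can back both an ever-growing table and a continuously updated one.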

Conclusion

In conclusion, by combining Apache Flink and Apache Kafka, we can build reliable, scalable, low-latency real-time data processing pipelines with fault tolerance and exactly-once processing guarantees. For businesses that want to evaluate and gain insights from streaming data as it arrives, this combination is a powerful option.
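Why replayable Kafka storage enables exactly-once processing can be shown with a small toy model in plain Python. It is a sketch under simplifying assumptions, not Flink's implementation: `Checkpoint` and `CountingJob` are hypothetical names, and the real mechanism uses distributed snapshots triggered by checkpoint barriers, while end-to-end exactly-once delivery into Kafka additionally relies on Kafka transactions in the sink. The key idea is that the source offset and the operator state are snapshotted together; on failure, both are restored and the log is re-read from the saved offset, so every event affects the state exactly once.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Checkpoint:
    offset: int               # next log offset to read after recovery
    counts: Dict[str, int]    # copy of the operator state at that offset


class CountingJob:
    """Toy job counting events per key from a replayable log (illustrative only)."""

    def __init__(self, log: List[str]) -> None:
        self.log = log
        self.offset = 0
        self.counts: Dict[str, int] = {}
        self.last_checkpoint = Checkpoint(0, {})

    def process(self, n: int) -> None:
        """Process up to n further events from the current offset."""
        end = min(self.offset + n, len(self.log))
        for key in self.log[self.offset:end]:
            self.counts[key] = self.counts.get(key, 0) + 1
        self.offset = end

    def checkpoint(self) -> None:
        """Snapshot the source offset and the state together."""
        self.last_checkpoint = Checkpoint(self.offset, dict(self.counts))

    def recover(self) -> None:
        """Simulate a failure: drop uncheckpointed state, restore the snapshot,
        and rewind the source to the checkpointed offset for replay."""
        self.offset = self.last_checkpoint.offset
        self.counts = dict(self.last_checkpoint.counts)


if __name__ == "__main__":
    job = CountingJob(["a", "b", "a", "c", "a"])
    job.process(2)
    job.checkpoint()
    job.process(2)   # these two events are lost in the simulated failure...
    job.recover()
    job.process(10)  # ...and are counted again, exactly once, on replay
    print(job.counts)
```

If the job rewound the offset without restoring the state (or kept the state without rewinding), the replayed events would be double-counted or lost; saving both in one snapshot is what keeps the result identical to a failure-free run.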

Thank you for reading this write-up. If you found this content valuable, please consider liking and sharing it.